These days at Testbirds, we’re talking a lot about chatbots, machine learning, and conversational agents. While these topics have always interested us, much of the excitement stems from our new partnership with Cognigy, the market leader in conversational AI, which we announced a few weeks ago. The partnership is already bearing fruit: on May 28th, 2019, we’ll host a webinar together with Cognigy focusing on chatbots and conversational AI. If you are interested in participating, have a look here.

Testing Chatbots

We cannot stress enough the importance of frequent testing and training of chatbots, especially since both the technological standards and users’ expectations of chatbots keep rising. In a previous blog post, we discussed how conversational agents work and how they can be improved in general. Now we want to take you behind the scenes on a more operational level: what does our chatbot testing work actually look like, and how do we use the power of crowdtesting to test chatbots?

The Customer Is Key – Pre-development Phase

Sure, chatbots are popular (Gartner even predicts that in two years, 50% of enterprises will spend more on chatbots than on apps), but when integrating conversational AI into a company’s customer-facing processes, it is crucial not to implement it just for the sake of it and to always keep the original purpose in mind: customer satisfaction. Does a customer really need a chatbot to find out a store’s opening hours? That information may be faster to find through a simple search of the website or the internet. At Testbirds, we have over 350,000 real testers worldwide who help us identify exactly this: real customer needs.

We are rarely involved in a client’s chatbot implementation before launch, even though we think early involvement makes a lot of sense. It gives the client natural feedback from their target customers on how, and in which situations, they would interact with a chatbot, with a variety and density of input that no product team could come up with on its own.

Chatbot Testing – Beta Phase

Most of the chatbot test projects we run with our clients start during the prototyping phase. At this stage, the optimization process is still very flexible, so feedback can be implemented directly after testing. With crowdtesting, the chatbot’s capabilities can be tested under real conditions by the target customers themselves. Below is a brief look at one of our recent tests.

Test Case

A client designed a chatbot to offer customers an additional channel for finding information on several different topics. The client wanted to test whether the bot delivered information correctly and quickly while behaving as naturally as possible.

Test Set-up

The designated Testbirds Project Manager chose a test group of 12 testers from our crowd (matching the target group of the client’s product, of course). Each tester then performed 100 unique interactions with the chatbot, spanning five different categories. The testers had to document their findings as follows:

- “Question/Interaction”: the question the tester asked

- “Bot Answer / Reaction”: the chatbot’s answer

- “The bot’s answer was”: the tester chose the appropriate rating from a drop-down list containing satisfying, unsatisfying, wrong, asks to try again, no answer/reaction, and other
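
To illustrate how documentation in this shape can be tallied, here is a minimal sketch, not the tooling used in the project. It assumes each entry is stored as a simple record; the class name `Interaction`, the field names, the function `rating_breakdown`, and the category labels in the usage example are hypothetical. Only the six drop-down options come from the test set-up above.

```python
from collections import Counter
from dataclasses import dataclass

# The six drop-down options from the test set-up.
ANSWER_RATINGS = (
    "satisfying",
    "unsatisfying",
    "wrong",
    "asks to try again",
    "no answer/reaction",
    "other",
)


@dataclass
class Interaction:
    """One documented tester interaction (hypothetical field names)."""
    tester_id: str
    category: str    # one of the five test categories (labels are assumptions)
    question: str    # "Question/Interaction"
    bot_answer: str  # "Bot Answer / Reaction"
    rating: str      # "The bot's answer was"

    def __post_init__(self) -> None:
        # Reject ratings that are not in the drop-down list.
        if self.rating not in ANSWER_RATINGS:
            raise ValueError(f"unknown rating: {self.rating!r}")


def rating_breakdown(interactions: list[Interaction]) -> dict[str, dict[str, int]]:
    """Count how often each rating occurred, per test category."""
    breakdown: dict[str, Counter] = {}
    for item in interactions:
        breakdown.setdefault(item.category, Counter())[item.rating] += 1
    return {category: dict(counts) for category, counts in breakdown.items()}


# Hypothetical sample entries, not data from the actual test.
logs = [
    Interaction("t01", "opening hours", "When are you open?", "Mon-Fri, 9-18.", "satisfying"),
    Interaction("t02", "opening hours", "open sunday?", "Sorry, I didn't get that.", "asks to try again"),
    Interaction("t01", "returns", "How do I return an item?", "", "no answer/reaction"),
]
print(rating_breakdown(logs))
```

Grouping by category rather than by tester makes it easy to spot which topics the bot handles poorly; the same records could just as well be grouped by `tester_id`.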

After performing and documenting their 100 unique interactions with the bot, the testers also answered five general questions about their experience with the chatbot:

- Which of the following words best describes your interaction with the bot? (please choose three to six words): human, complicated, intuitive, unpleasant, inviting, frustrating, simple, awkward, pleasant, dismissive, motivating, technical

- How would you rate the language used by the bot? (1 = very natural; 6 = very unnatural)

- How would you rate your overall experience with the bot? (1 = very satisfying; 6 = very disappointing)

- How likely is it that you would use this chatbot in the future? (1 = very likely; 6 = very unlikely)

- Is there anything you can think of that you especially liked or disliked about the bot?
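
The closed survey questions above can be summarized with a few lines of code. The following is a minimal sketch under stated assumptions: the function names `summarize_scale` and `word_frequencies` are hypothetical, and the "top-two share" (the fraction of answers rated 1 or 2) is a common survey metric we chose for illustration, not one the test necessarily reported. The word list and the 1 to 6 scale (1 = best) come from the questions above.

```python
from collections import Counter
from statistics import mean, median

# Word list offered in the first survey question.
WORD_OPTIONS = {
    "human", "complicated", "intuitive", "unpleasant", "inviting", "frustrating",
    "simple", "awkward", "pleasant", "dismissive", "motivating", "technical",
}


def summarize_scale(scores: list[int]) -> dict[str, float]:
    """Summarize answers on the 1-6 scale, where 1 is the best rating."""
    if not scores or any(s < 1 or s > 6 for s in scores):
        raise ValueError("scores must be a non-empty list of integers from 1 to 6")
    # Assumed metric: share of testers who answered 1 or 2.
    top_two_share = sum(1 for s in scores if s <= 2) / len(scores)
    return {"mean": mean(scores), "median": median(scores), "top_two_share": top_two_share}


def word_frequencies(selections: list[list[str]]) -> Counter:
    """Count how often each descriptive word was picked across all testers."""
    counts: Counter = Counter()
    for words in selections:
        # Each tester had to pick three to six words from the offered list.
        if not 3 <= len(words) <= 6 or not set(words) <= WORD_OPTIONS:
            raise ValueError(f"invalid selection: {words}")
        counts.update(words)
    return counts


# Hypothetical answers from 12 testers to "How would you rate the language used by the bot?"
language_scores = [2, 1, 3, 2, 4, 2, 1, 3, 2, 2, 5, 3]
print(summarize_scale(language_scores))
```

Because 1 is the best value on these scales, a lower mean is better; reporting the median and top-two share alongside it helps when a few very negative answers skew the average.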

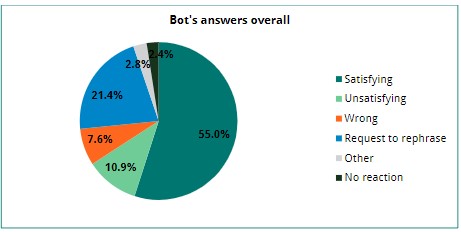

Results

Normally, a test like this takes two to three days to set up. After the testing phase, the Testbirds Project Manager compiles all results in a final report and presents the findings and recommended actions to the client. If the client has clearly defined the test purpose and the testing categories in advance, a chatbot usability test can be completed in just one week. Better still, the collected questions and interactions provide additional training data for the chatbot.

Chatbot Training – Continuous Testing Process

As mentioned above, AI technology is constantly improving, and the sheer variety of ways people ask for information makes broad language training crucial. At Testbirds, the natural diversity of the crowd offers a linguistic variety that developers or individual testers cannot guarantee, and it can deliver hundreds of new data sets that the client can then feed into their chatbot training process. UX and QA testing of a chatbot should not be a one-time project; it needs to be integrated as a continuous QA process to ensure that the chatbot performs at the highest possible level and adds real value for the customer. With an ongoing testing setup, the testing and training criteria can be adjusted at each iteration, making it possible to cover more and more topics or to expand the tester group. This way, the chatbot becomes better trained with every test.
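
One way to turn the collected interactions into training input across repeated test rounds is sketched below. This is an illustrative assumption, not Testbirds’ or Cognigy’s actual pipeline: the function names, the dictionary keys, and the choice to prioritize questions the bot handled poorly are all hypothetical. A shared `seen` set carries over between rounds, so phrasings already collected in an earlier iteration are not submitted twice.

```python
import re
from collections import defaultdict

# Assumption: these ratings mark questions worth adding as new training utterances.
FAILED_RATINGS = {"unsatisfying", "wrong", "asks to try again", "no answer/reaction"}


def normalize(text: str) -> str:
    """Lower-case and collapse whitespace so trivially different phrasings dedupe."""
    return re.sub(r"\s+", " ", text.strip().lower())


def training_candidates(interactions: list[dict], seen: set[str]) -> dict[str, list[str]]:
    """Group new, previously unseen, poorly handled questions by category.

    `seen` is updated in place so it can be reused across test iterations.
    """
    candidates: dict[str, list[str]] = defaultdict(list)
    for item in interactions:
        key = normalize(item["question"])
        if item["rating"] in FAILED_RATINGS and key not in seen:
            seen.add(key)
            candidates[item["category"]].append(item["question"].strip())
    return dict(candidates)


# Two hypothetical test rounds, not data from the actual project.
seen: set[str] = set()
round_1 = [
    {"category": "opening hours", "question": "open sunday?", "rating": "asks to try again"},
    {"category": "opening hours", "question": "When are you open?", "rating": "satisfying"},
    {"category": "returns", "question": "can i send stuff back", "rating": "wrong"},
]
round_2 = [
    {"category": "opening hours", "question": "Open  Sunday? ", "rating": "no answer/reaction"},
    {"category": "returns", "question": "refund pls", "rating": "unsatisfying"},
]
print(training_candidates(round_1, seen))
print(training_candidates(round_2, seen))
```

In the second round, "Open  Sunday? " normalizes to a phrasing already collected in round one and is skipped, while "refund pls" is new. The candidates would still need to be reviewed and mapped to intents before being used for training.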

Interested in Learning More?

On May 28th, 2019, at 17:00 CEST, we will go into much more detail on how to build conversational agents and chatbots and optimize their UX, and we will reveal the “10 Dos and Don’ts when building a chatbot”. Until then, keep your customers happy, and your robots too!